Paper accepted in Language and Speech!

Congratulations to Ronny on his first journal publication from his PhD project! Want to know how people use lip movements and simple hand gestures to perceive lexical stress? Read it here!

What’s it about?

For Ronny’s first-year project, he video-recorded his PhD supervisor saying simple Dutch words that critically differed in which syllable carries stress, much like English CONtent [noun] vs. conTENT [adjective]. The talker sometimes did and sometimes didn’t produce a simple beat gesture on the word he was saying. Using audio- and video-editing techniques, Ronny created artificial videos that allowed him to play around with the auditory and visual cues to stress in all possible combinations. For instance, he created videos in which the talker’s voice was saying CONtent, but the talker’s face came from a recording of conTENT, while the talker’s body came from a recording in which the talker produced a beat gesture on the first syllable (biasing towards CONtent again). He then presented these manipulated videos to several groups of native Dutch participants and asked them which word they thought the talker had said.

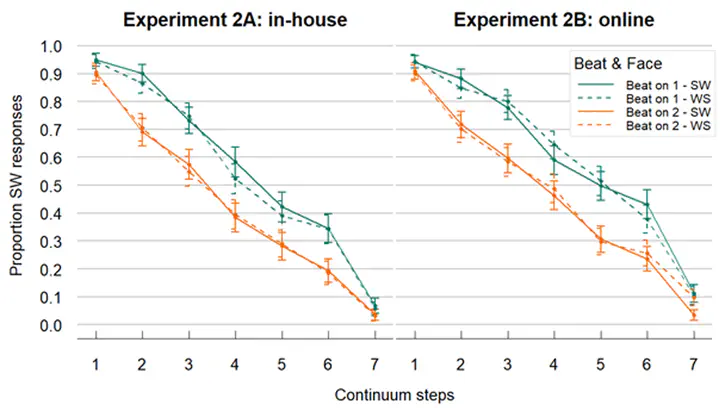

Results showed that people primarily relied on auditory cues to stress, as well as on visual cues from the timing of simple hand gestures. However, although we demonstrated that people could tell apart the lip movements in a CONtent video from those in a conTENT video when there was no audio, we found no evidence that people used these visual articulatory cues to stress in audiovisual videos (i.e., with audio).

Why is this important?

When it comes to perceiving vowels and consonants in speech, visual articulatory cues play an important role. Seeing a person’s face can greatly help with speech perception in noisy listening conditions and can even mislead you into hearing things that weren’t said at all. Our paper, however, suggests that visual articulatory cues to stress are not heavily relied on in audiovisual speech perception. This is striking, because we showed that those cues are informative and could have helped participants make their decisions. As such, these outcomes demonstrate that people weigh the multisensory cues in the audiovisual input signal differently, presumably depending on how reliable each cue is.

Is that it?

Interestingly, the timing of simple hand gestures did contribute consistently to participants’ perceptual decisions: a beat gesture timed on the first syllable biased participants towards hearing CONtent, while the same gesture timed on the second syllable biased them towards hearing conTENT. This replicates earlier work from our group and, importantly, also extends it by using more natural gestures, a more realistic presentation size, and richer stimuli (i.e., with the talker’s face visible).

Full reference

The full citation, open access PDF, and all data are publicly available from the links below:

(2024). Audiovisual perception of lexical stress: Beat gestures and articulatory cues. Language and Speech, 68(1), 181-203. doi:10.1177/00238309241258162.